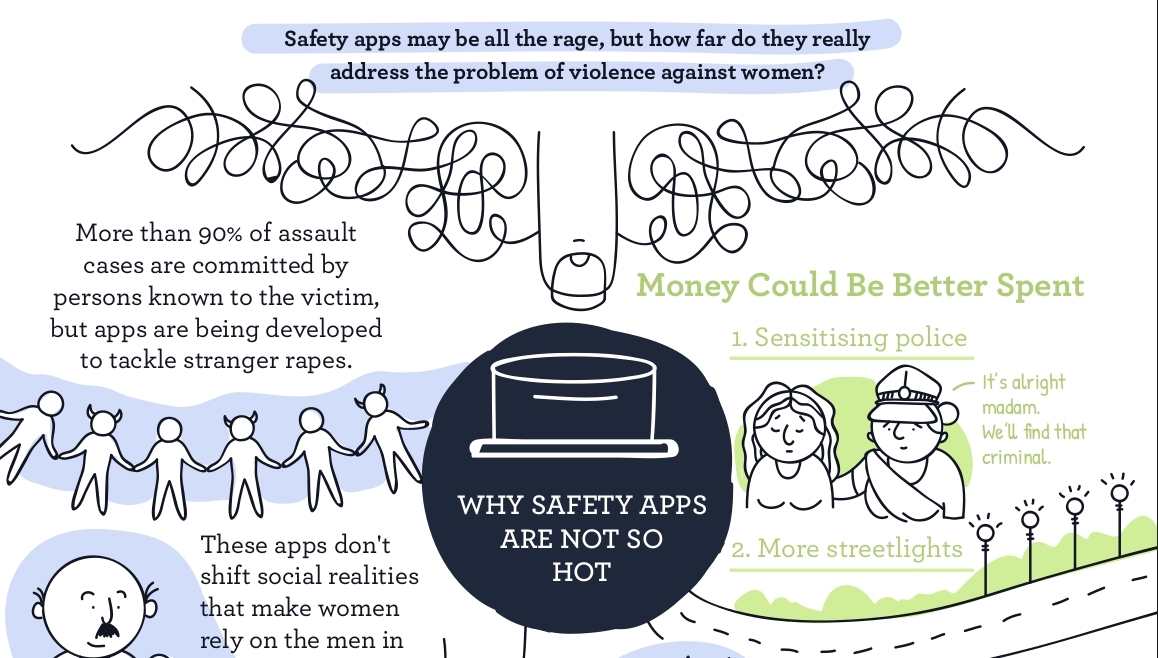

The number of apps that seek to make public spaces safer for women is simply startling. Safety apps are packaged as one of the top solutions to address the problem of high rates of crimes against women by so many different actors: police forces of different states, government departments, political parties, IIT students, winners of industry contests on the theme of women’s safety, wearables start-ups, entertainment businesses, telecommunication service providers, companies concerned about the safety of their women employees - they have all thrown in their bit.

In fact, a cursory search for ‘Nirbhaya’ on the Play Store alone will list over a dozen apps - the search word referencing the gang rape that took place in a public bus in Delhi and led to an upswell of sentiment about crimes against women in the country in December 2012. Many more apps can be discovered with a combination of some of these search terms: ‘women’, ‘safety’, ‘panic’, ‘button’, ‘sos’.

Image: Four of more than a dozen apps named after what the victim of the 2012 Delhi gang rape came to be called. Ironically, a trusted person was already with the victim, and an inquiry found that the police vans that were alerted could have responded more swiftly - one can surmise that the availability of an app on the young woman’s phone would have changed little in such a situation.

Given the number of actors who seem to think women’s safety apps are the answer to the chilling rate of violence against women, we wondered whether, when and how these apps are actually useful. We decided to assess fifty such apps to see what features the apps offer, whether their commitment to women’s safety is reflected in their data policies and how accessible they are.

But before we get into that, let’s first look at how well women’s safety apps take into account the context of long-ongoing fights against violence against women in the country. Do much-celebrated safety apps make use of the body of knowledge we have on violence against women in creating solutions?

1. Who wants to tell app developers about existing research on VAW?

Sadly, here is what we found: women’s safety initiatives in general and safety apps in particular do not meaningfully engage with male entitlement over women’s bodies or attempt to unseat male hegemony that underlies violence.

Most apps are aimed at making public spaces safer, and are designed to do so by reducing the time taken to contact someone in a dangerous situation. The latest data from the National Crime Records Bureau shows that fewer than 6% of rapes in India are stranger rapes. This trend has been common knowledge for a while now, and so has the reluctance of State policies to engage with this uncomfortable truth. The enthusiasm and self-congratulatory fervour in designing safety apps aimed at women for use in public spaces does not take into account the fact that most cases of sexual violence are perpetrated by persons known to the victim. Apps that make it easy to contact the police or a family member in a short time are of no use when cases of sexual assault by a known person can involve wilful ignorance by family members, reluctance of police to meddle in ‘family matters’, blackmail and any number of other complications.

Also, resources and public imagination spent on such quick-fix solutions can be better used to improve public lighting, sensitise police, hire people in understaffed areas for policing etc. Technology that patches a small gap in finding timely help cannot replace a wider effort to change societal attitudes that endanger women in the first place. A multiplicity of approaches working together to address different aspects of the issue is required.

Women’s safety solutions by the government deserve special attention, as oftentimes, problematic policies find a convenient excuse in women’s safety. Most memorably in this context, the Department of Telecommunications has made ‘panic buttons’ compulsory on all phones starting 1st January 2017. This has been mandated even for feature phones. But without privacy legislation, experts fear, this move could create a mechanism for law enforcement to track anyone with a phone in real time, with an accuracy that was not possible with cell-tower data. At the same time, no information has been disclosed about which agency would respond to the alerts raised, and whether remote activation of GPS is possible. It therefore becomes hard to judge whether such a move is truly of any value in fighting violence against women. It goes without saying that the policy does not equip users to function autonomously.

To be sure, products built for ‘women’s safety’, by government agencies and commercial entities alike, see no issues with such dependence. If the product design doesn’t already give it away, mindsets which consider a woman’s dependence on her brothers, fathers and husbands for her safety a natural order of things are unmissable in the marketing. See Sonata’s ACT watch, for example.

Image: Reliance’s Spottr is not marketed as a women’s safety app, but consider its messaging encouraging surveillance of women in the family. Image from here.

h/t https://twitter.com/HartoshSinghBal/status/807918033687584768

Table: A quick roundup of the main points that emerged from the apps we assessed

At a glance...

- We identified the different types of features that make up these apps, and found that most of them don’t do much to increase autonomy of the user. Features like constant tracking and geofencing are potent as tools for surveillance by partners and family members.

- We also identified the different types of actors to whom the alert is sent, like the police, or networked publics. It appears that the reliability of response in some of these options (as in the case of alerts showing up on one’s Facebook feed) is quite dubious.

- Several privacy and security concerns emerged, as many of these apps don’t have a privacy policy, let alone policies making specific commitments about collection, storage and use of personal information. The apps that do have privacy policies are not always transparent about whether and how personal information is used or monetised. This raises questions about whether an understanding of safety that is limited to bodily harm is sufficient, and whether disembodied effects of collection and use of data by these apps introduce fresh threats to safety.

- We found many accessibility issues for persons with visual impairment across apps.

2. So what features are on offer?

If you think safety apps could work for you, it's worth paying attention to the different features on offer and seeing which types work best for you. How much autonomy one is willing to compromise for the sake of safety not only differs from person to person, but also shifts constantly for the same person, depending on circumstances.

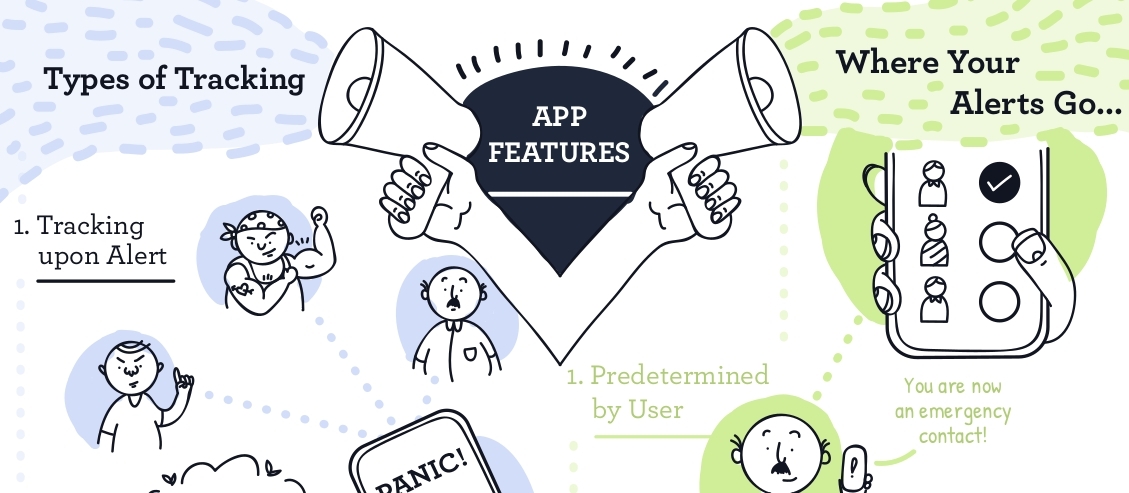

The thrust of all safety apps is that they leverage location information of the user in order to respond to situations of danger that the user may encounter. We grouped the main features under a few heads:

- Tracking upon alert (and additional information upon alert): The user is able to alert one or more parties when she finds herself in a dangerous situation. Usually, the information sent is the location of the user. In some apps, this information is supplemented with audio or video recordings taken during the time of alert, or some other additional information.

- Constant tracking: The user’s location information is trackable in real time by one or more parties whom the user chooses. This type includes a very popular feature referred to as ‘walk with me’, integrated in some Google apps as well. It allows a remote contact to trace the user’s location constantly for a specified distance.

- Geofencing: The user, or the ‘emergency contact’, selects an area on the map within which the user is expected to be. The user’s stepping outside of this area triggers an alert to the emergency contact.

- Heatmaps: The user is alerted when a particular area is deemed unsafe. Such a determination is made on the basis of crowdsourced data for specific categories, or otherwise tagged data for different locations in (usually) a city.

Of the above features, the only one that does not involve being dependent on others for swift help is ‘heatmaps’. But crowdsourced information about which areas can be perceived to be ‘safe’ might be marked by class, caste and other biases. For example, an area predominantly occupied by members of a lower economic stratum might be written off as unsafe compared to a gated colony with posh houses, even though the density of people on the streets might be higher in the former case. Safetipin, which offers heatmaps of some cities, avoids such biases to a large extent by having concrete parameters, like lighting and openness, on the basis of which users are encouraged to rate areas, along with some intangible factors like the feeling of safety that a place inspires.

Some circumstances might call for the use of an app that has the ‘Walk with me’ feature, while information available on heat maps might be useful to plan other outings. ‘Walk with me’ is a popular feature where a user can share his/her location with an emergency contact for a fixed route. The emergency contact can monitor if the user is going on the expected route and check on the person in case there’s a deviation from the expected route. ‘Walk-with-me’ could be useful when the user has complete choice in whether she wants to share her location with a person she trusts, for what purpose, and for what duration of time. But when an app enables constant tracking of the user by the emergency contact, it risks becoming a sort of digital leash that persons wishing to exert control on ‘their’ women wield.

Features like ‘geo-fencing’ enable a remote person to monitor movements of the user. Such a feature in the hands of restrictive family members can mean a freeze on the mobility of the user, extending surveillance that takes place within homes to the outdoors, and that too, from a distance. Indeed, it risks being a new form of lakshman rekha, brought to you by women’s safety initiatives.
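To make concrete how little a geofence needs in order to work, here is a minimal sketch in Python of the check described above, assuming a circular fence defined by a centre and a radius. None of the apps we assessed document their implementation, so the function names, the circular shape and the coordinates below are illustrative assumptions, not any app's actual method.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def outside_geofence(user, centre, radius_m):
    """True when the user's (lat, lon) lies farther than radius_m from the centre."""
    return haversine_m(*user, *centre) > radius_m


# Hypothetical fence: 500 m around an arbitrary point in Delhi.
centre = (28.6139, 77.2090)
if outside_geofence((28.6239, 77.2090), centre, radius_m=500):
    print("alert the emergency contact")
```

Note that nothing in this check requires any action from the user once location sharing is on. Whoever sets `centre` and `radius_m` decides where the user is ‘expected’ to be, which is why it matters whether the user or the emergency contact draws the fence.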

Where apps send additional information like photos and videos upon alert to your emergency contacts or known third parties, it is crucial to be aware of what the app’s record with false positives is. Some apps allow for sending off an alert when you shake your phone, or press the power button twice. There might be situations where such alerts are triggered without you having intended it. It's undesirable for this to happen with apps that send only your location, and doubly so when photos and videos are involved.

It is useful to think about what your requirements for safety are, how much control over and access to the details of your life you’re willing to give your emergency contact, and when you wish to revoke that access. Similarly, if you choose to use an app that provides heatmaps, one that rates areas on concrete parameters could help you remain critical.

3. Where do the alerts go?

Most apps we tested involve the user reacting to a dangerous situation by raising an alarm to alert one or more actors. Depending on which app you choose, different actors will be involved in responding to the situation. We categorised these actors into three groups as they each involve distinct types of risks.

- Pre-determined contacts set by the user: The app allows the user to select one or more contacts in her contacts list, and alerts are sent to these contacts. The contacts are notified when they are added as emergency contacts.

- Known third party: The alerts are sent to a third party who is expected to respond to the situation, like police station control rooms or call centres of the app.

- Other ‘networked publics’: There is a logic behind where the alerts are sent, but there is no way of knowing who exactly the alert will or will not reach. For example, one app posts alerts on the user’s Facebook wall. Another app sends alerts to users of the same app who happen to be in the same area, but there is no way of telling beforehand who these people actually are.

Some apps send alerts to a combination of the above types of actors.

There are power relations at play between women using these apps and the different people we are able to alert to call for help. Depending on your personal circumstances, some types of actors might be better suited than others to respond in an emergency. The police might be more ready to help a woman running into trouble at a bus stop than a drunk teenager facing trouble in a nightclub. If you are a transwoman, or a member of a marginalised group that has a history of not finding favour with the local police, they might not be your emergency contact of choice either.

User-determined emergency contacts are preferable for some over known third parties. Even within your contact list, you might wish to enlist a sympathetic female friend with a car over the husband who thinks it is unwise to walk on a lonely street in clothes he doesn’t approve of. Given the variability of these personal circumstances, different apps work for different folks.

Alerting pre-determined contacts that you set yourself might at first seem to enhance user choice, but it is very much possible that such contacts are husbands, brothers or other members of your family who extend surveillance at home to public spaces.

Apps like these often seem to forget that. Google recently announced its dedicated safety app called Trusted Contacts. While the app doesn’t target women specifically, what's interesting is one of its features: when a trusted contact is worried about the user, he/she can request the user's location. If the user happens to not respond (or is not able to respond), their location gets shared automatically. Such a feature could disproportionately affect women who have several restrictions imposed on them by family members about what is a legitimate time, place and purpose to be out.

One can similarly predict the biases of ‘known third parties’ - police and helplines linked to applications all fall under this category. You might not be comfortable having to reach out to the police if you are a sex worker who is being stalked by a client, given institutional histories of biases against some groups.

With networked publics, it is hard to gauge the reliability of responses. There may be no way of telling the intentions of parties who are now in possession of information about you. A user of the same app in the neighbourhood might be well placed to reach you in a short duration, but who’s to say whether such a user is going to get there at all? Similarly, if you think your best shot at finding a quick responder is by sending a shout-out on your Facebook, then these apps cater to you. But, with the likely lack of context, it is not a given that friends on Facebook or followers on Twitter will take an alert seriously, and act upon it.

4. Are you “safe” when data about you is not safe?

At the time of downloading, all apps we assessed required permissions far greater than what the app really needs access to in order to function. Apps that are meant to send location information to a few contacts demand access to your media files, identity information, device ID, call information and half a dozen other fields. This is a trend across apps.
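The comparison behind this observation can be sketched in a few lines of Python. The ‘needed’ set below is our own assumption about what an app that only texts a location to chosen contacts would require, and the ‘requested’ list is a made-up example rather than the manifest of any app we assessed. The permission strings themselves are standard Android permission names.

```python
# Assumed minimal set for an app that only sends a location by SMS
# to contacts picked by the user (an illustrative judgment, not a standard).
NEEDED = {
    "android.permission.ACCESS_FINE_LOCATION",
    "android.permission.SEND_SMS",
    "android.permission.READ_CONTACTS",
}


def excess_permissions(requested, needed=NEEDED):
    """Return, sorted, the requested permissions that go beyond the needed set."""
    return sorted(set(requested) - set(needed))


# Hypothetical request list, for illustration only.
requested = [
    "android.permission.ACCESS_FINE_LOCATION",
    "android.permission.SEND_SMS",
    "android.permission.READ_CONTACTS",
    "android.permission.READ_EXTERNAL_STORAGE",  # media files
    "android.permission.GET_ACCOUNTS",           # identity information
    "android.permission.READ_PHONE_STATE",       # device ID
    "android.permission.READ_CALL_LOG",          # call information
]

for permission in excess_permissions(requested):
    print(permission)
```

Anything this prints is access the app asks for without an obvious safety purpose. You can make the same comparison by hand from the permissions listed on an app's Play Store page.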

Thirty-two out of the fifty apps that we assessed did not link to a privacy policy on their Play Store page. None of the government-offered safety apps, except the Jharkhand Police’s ‘Shakthi’, has a privacy policy. Where privacy policies were present, either on the Play Store listing or on the developer’s website (including in the case of Shakthi), they were woefully lacking in information about what data they collect, whether they store, process and sell the data, etc. In fact, the developers of one of the apps without a privacy policy offer analytics in the e-commerce, retail, healthcare and government services sectors. This means that the developers are free to use data about you, infer patterns from your data and sell it to interested parties.

It might be an industry-wide practice to harvest personal data of users and sell it in a lucrative marketplace. Companies like Facebook have been reported to create micro-detailed profiles of users on the basis of their preferences, page visits, etc. So perhaps it is not surprising, then, that safety apps are doing the same?

Maybe not, but it is ironic. How can apps claim to enhance your safety if they are treating your sensitive personal information with complete complacency or even disdain?! In the absence of privacy protections in law and widespread industry practice eschewing responsibility, users of mobile phones are left with the imperfect solution of tweaking their own behaviour to reduce their data footprint. For example, location information, being sensitive data, should be turned off after specific uses, say security gurus. Yet safety apps encourage users to do the very opposite, as their very premise is that users should leave their location information on in anticipation of danger. At the same time, the people who develop and market these apps make no commitment about keeping your data safe.

5. Accessibility of safety apps

There is one more area we need to look into: if so much money is spent on safety apps, how accessible are they actually?

We tested for three fields of accessibility for the visually impaired: whether the colour contrast of text and images is according to standards, whether all elements are labelled such that the app is readable by a screen-reader and whether touch targets are large enough or not. This assessment was done with the help of the ‘Accessibility Scanner’ app.
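For readers curious about what two of these checks involve, here is a rough sketch in Python. It assumes the WCAG 2.x contrast formula with its 4.5:1 (normal text) and 3:1 (large text) thresholds, and a 48dp minimum touch target. These are the commonly cited values and are our assumption here: we relied on the Accessibility Scanner app's own output and did not compute these ourselves.

```python
def _linear(channel):
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb):
    """Relative luminance of an (R, G, B) colour with channels in 0-255."""
    r, g, b = (_linear(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg, bg):
    """Contrast ratio between two colours, from 1.0 (identical) up to 21.0."""
    lighter, darker = sorted(
        (relative_luminance(fg), relative_luminance(bg)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_contrast(fg, bg, large_text=False):
    """Assumed thresholds: 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)


def touch_target_ok(width_dp, height_dp, minimum_dp=48):
    """Assumed minimum size of 48dp on each side."""
    return width_dp >= minimum_dp and height_dp >= minimum_dp


# Light grey text on a white background, a pattern common in app interfaces.
print(passes_contrast((200, 200, 200), (255, 255, 255)))
print(touch_target_ok(32, 32))
```

Both example calls print `False`: pale grey on white falls well short of the assumed threshold, and a 32dp button is smaller than the assumed minimum.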

Sadly, none of the 50 apps was fully accessible with a screen reader, as not all elements were labelled. Colour contrasts did not meet the standards required for the apps to be accessible to partially blind persons. Also, touch targets on apps requiring users to press a button to raise an alert were not large enough, making these apps unusable for partially and fully blind persons.

This adds visually impaired users to a long list of women who are disqualified from using the available app-based solutions. Women who do not own a smartphone, who cannot afford mobile data, who do not live in places with good connectivity, who cannot speak English - none of them are envisaged as users of safety apps.

That leaves us with urban, able-bodied women who can afford smartphones and data packs. Even then, the terms and conditions of these safety apps clearly state that the app absolves itself of any responsibility for your safety.

Having said all that, if you think you need to use a safety app, then it is worth examining if any one of them is suited to your unique circumstances. We all trade a bit of autonomy and privacy in exchange for safety and security, but you don’t want to use an app that harms you instead of helping you - even when you’re not being assaulted!

6. How we went about the assessment:

We selected 50 apps that are representative of the types of solutions on offer. We included apps that are not developed specifically for Indian markets, but are claimed to be popular here. Apps with very few downloads that did not offer additional features were excluded. We also included one app that is not specifically marketed towards women, but has the maximum number of downloads and finds mention in articles that list the best women’s safety apps. We also chose some apps that have only 1000+ downloads, as they were interesting for other reasons, such as the profile of the developers or the way they exemplify the promotion of stereotypes about women. We then examined all apps for the data points discussed above. You can find the table here (licensed under Creative Commons BY 4.0).

Read next

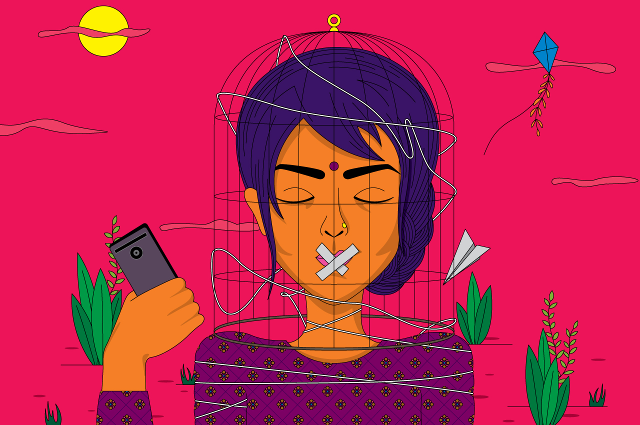

‘Chupke, Chupke’: Going Behind the Mobile Phone Bans in North India

Since 2010, a number of khap panchayats across north India have pronounced bans on mobile phone use for young women. What drives such orders? Are they really effective? And how do young women themselves respond to the bans and the underlying anxieties? We went to Haryana and western UP to find out.

Caution! Women at Work: Surveillance in Garments Factories

Garment factory workers, predominantly women, work in stressful conditions in an exploitative industry where the pay is low. Read on to find out what CCTV cameras have done to the dramatic imbalance of power between workers and the factory management, and what role they can play in workers’ fight for an equitable workplace.